WEB BROWSER

MOBILE BROWSER

OVERVIEW

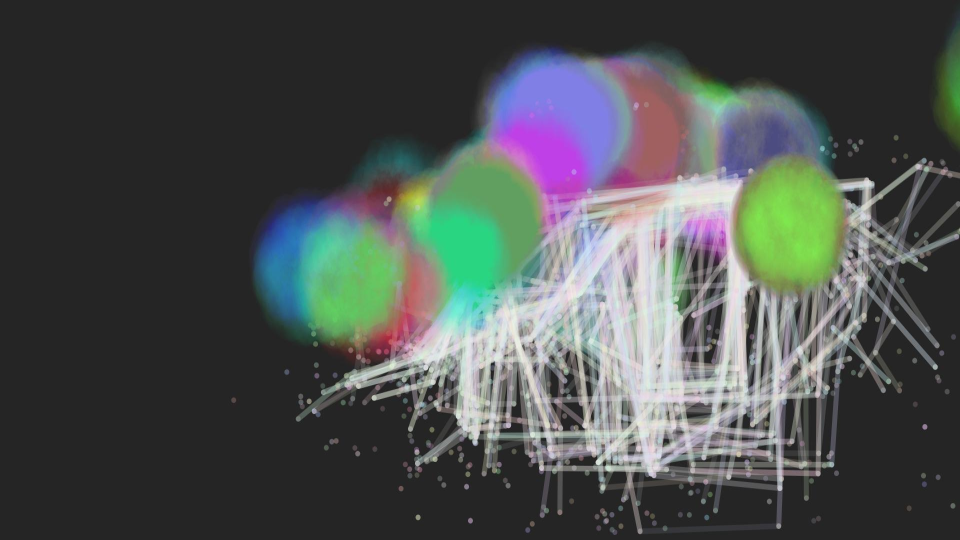

Hanul–Computer Interaction (HCI) is an interactive system that transforms users’ body movements into real-time graphical reflections. Built on machine-learning-based pose estimation, the interface allows users to co-create ephemeral visuals through embodied interaction with an AI model.

“Draw Yourself: A Digital Dance”

MOTIVATION

The project explores collaborative creativity between designers and machines. Through a series of iterative experiments, the designer (Hanul) and a machine learning model (nicknamed “Computer”) establish a co‑dependent creative relationship. The resulting system reflects not only user motion but also the dynamics of human–machine co‑authorship.

The project further critiques the systemic reduction of human identity to data. In an age of biometric surveillance and predictive profiling, HCI challenges dominant narratives by rejecting data retention and emphasizing anonymity and abstraction.

APPROACH

The system uses PoseNet, a real‑time pose estimation model available through the ml5.js library and powered by TensorFlow.js. No identifiable data is collected; instead, blurred and anonymized visuals are generated to safeguard user privacy. This aesthetic choice counters the extractive nature of surveillance‑based design and promotes critical discourse on data ethics.
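To make the anonymization idea concrete, here is one way pose keypoints could be turned into abstract marks before anything is drawn. This is a minimal sketch, not the project's actual code. The `Keypoint` shape is modeled loosely on the pose results ml5.js PoseNet reports (a body-part name, a confidence score, and an x/y position). The function name `keypointsToMarks`, the 0.3 confidence threshold, and the use of grid snapping as a stand-in for blur are all hypothetical choices. Only keypoint coordinates reach the drawing stage; no camera pixels are kept.

```typescript
// Hypothetical keypoint shape, modeled loosely on ml5.js PoseNet pose results.
interface Keypoint {
  part: string;
  score: number;
  position: { x: number; y: number };
}

// An abstract mark: a location, size, and hue, never camera pixels.
interface Mark {
  x: number;
  y: number;
  radius: number;
  hue: number;
}

// Convert one frame's keypoints into abstract marks (hypothetical helper).
// Keypoints below minScore are dropped, and coordinates are snapped to a
// coarse grid so fine body detail is discarded (a simple stand-in for blur).
function keypointsToMarks(
  keypoints: Keypoint[],
  minScore = 0.3,
  gridSize = 20,
): Mark[] {
  const snap = (v: number): number => Math.round(v / gridSize) * gridSize;
  const marks: Mark[] = [];
  keypoints.forEach((kp, i) => {
    if (kp.score < minScore) return; // skip unreliable detections
    marks.push({
      x: snap(kp.position.x),
      y: snap(kp.position.y),
      radius: 10 + 30 * kp.score, // more confident points draw larger
      hue: (i * 360) / keypoints.length, // spread body parts around the color wheel
    });
  });
  return marks;
}
```

In a browser, each frame's keypoints from PoseNet would be passed to a function like this and the resulting marks drawn on a canvas. A larger `gridSize` trades positional fidelity for stronger abstraction.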

“Pixels & Privacy: Dance Your Data Away”

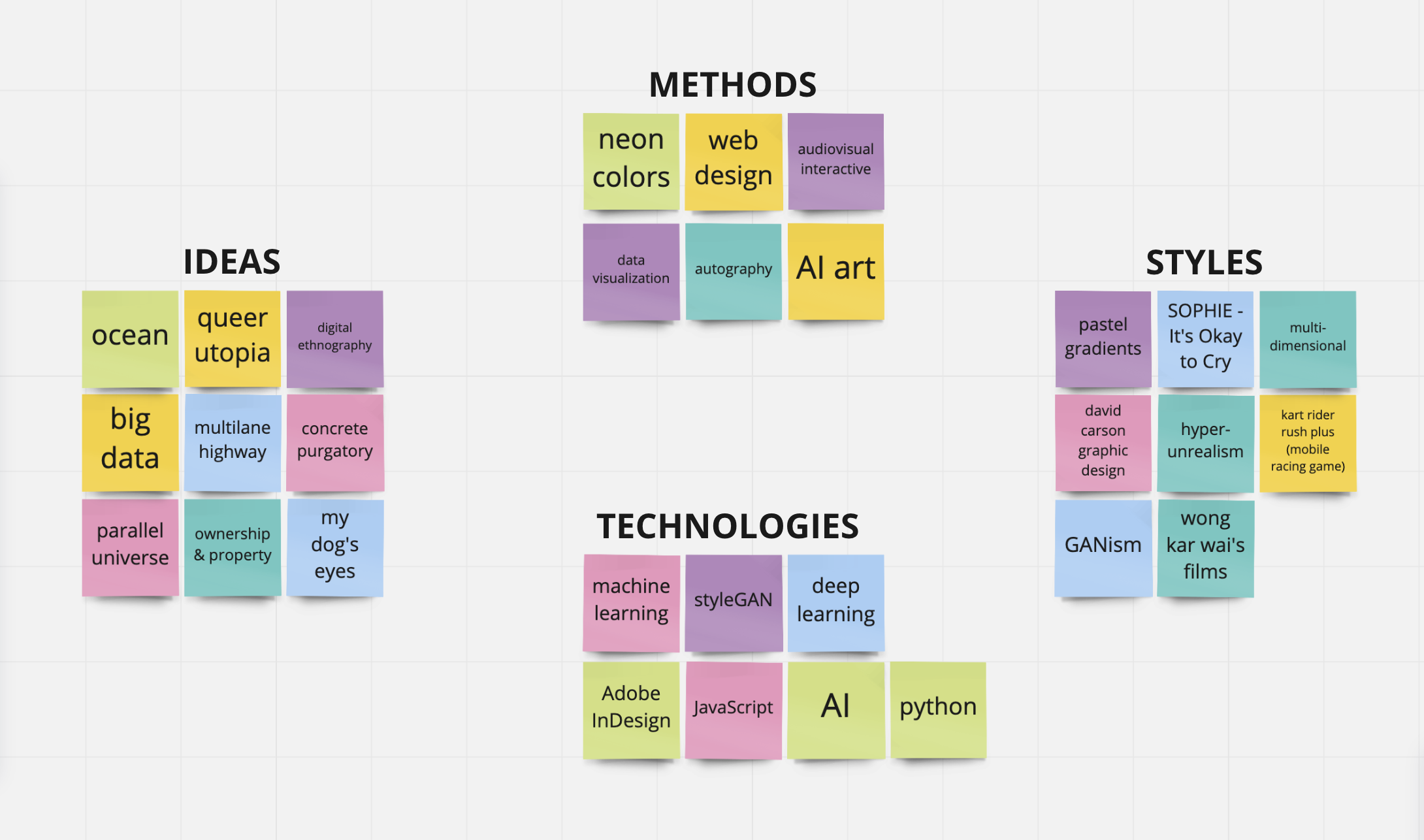

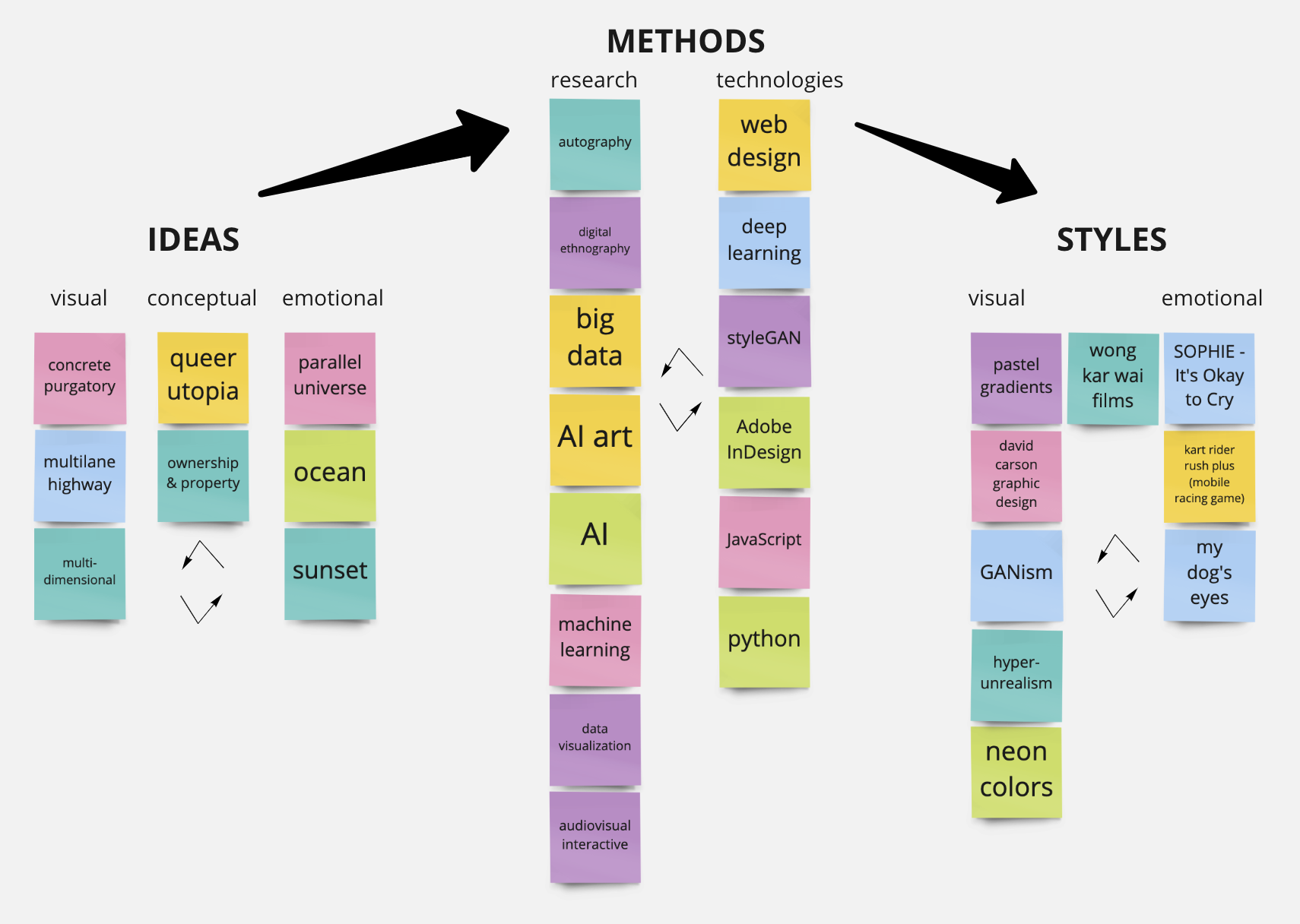

IDEATION

CREATIVE METHODOLOGY

The project prioritizes process over product. Each prototype is treated as a sketch, a generative moment within an ongoing inquiry into human–machine interaction. Hanul positions themself not only as a maker but as a curator of experience and conversation.

ETHNOGRAPHIC FRAMING

The research is grounded in a hybrid of autoethnography and digital ethnography, informed by Actor‑Network Theory (ANT). ANT frames the relationship between human and machine as an ontologically symmetrical network of actants. The project becomes a site for exploring authorship, agency, and material expression across human and non‑human actors.

1. "FAMILY PHOTO‑PAINTING ALBUM"

This experiment humanizes GANs by applying them to emotionally significant images. It transforms neural style transfer into a sentimental act of digital memory‑making.

Model: Generative Adversarial Networks (GAN)

Platform: RunwayML (Style Transfer)

Inputs: Selected original photos of myself & my dog

Styles: Famous paintings (Cubism, Van Gogh, Hokusai, Kandinsky, Monet, Picasso, Wu Guanzhong)

2. "IN PIXELS"

This visual poetry project explores how classical literature can be reinterpreted by neural networks, bridging ancient verse with generative aesthetics.

Model: Vector Quantized Generative Adversarial Network and Contrastive Language–Image Pre‑training (VQGAN+CLIP)

Platform: NightCafe (Text to Image)

Inputs: Sappho, “Poems of Sappho,” translated by Julia Dubnoff, University of Houston

INTERACTION DESIGN

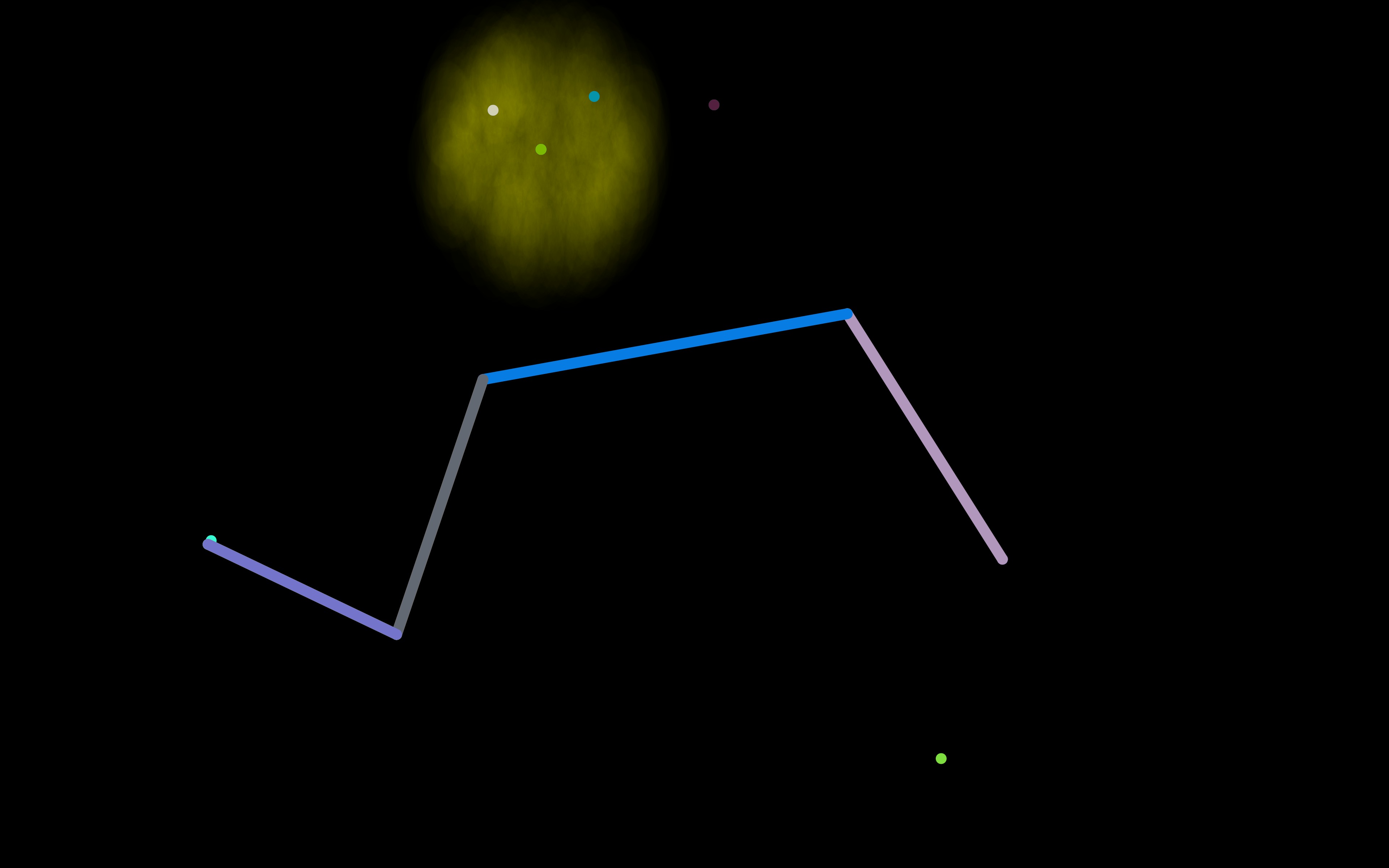

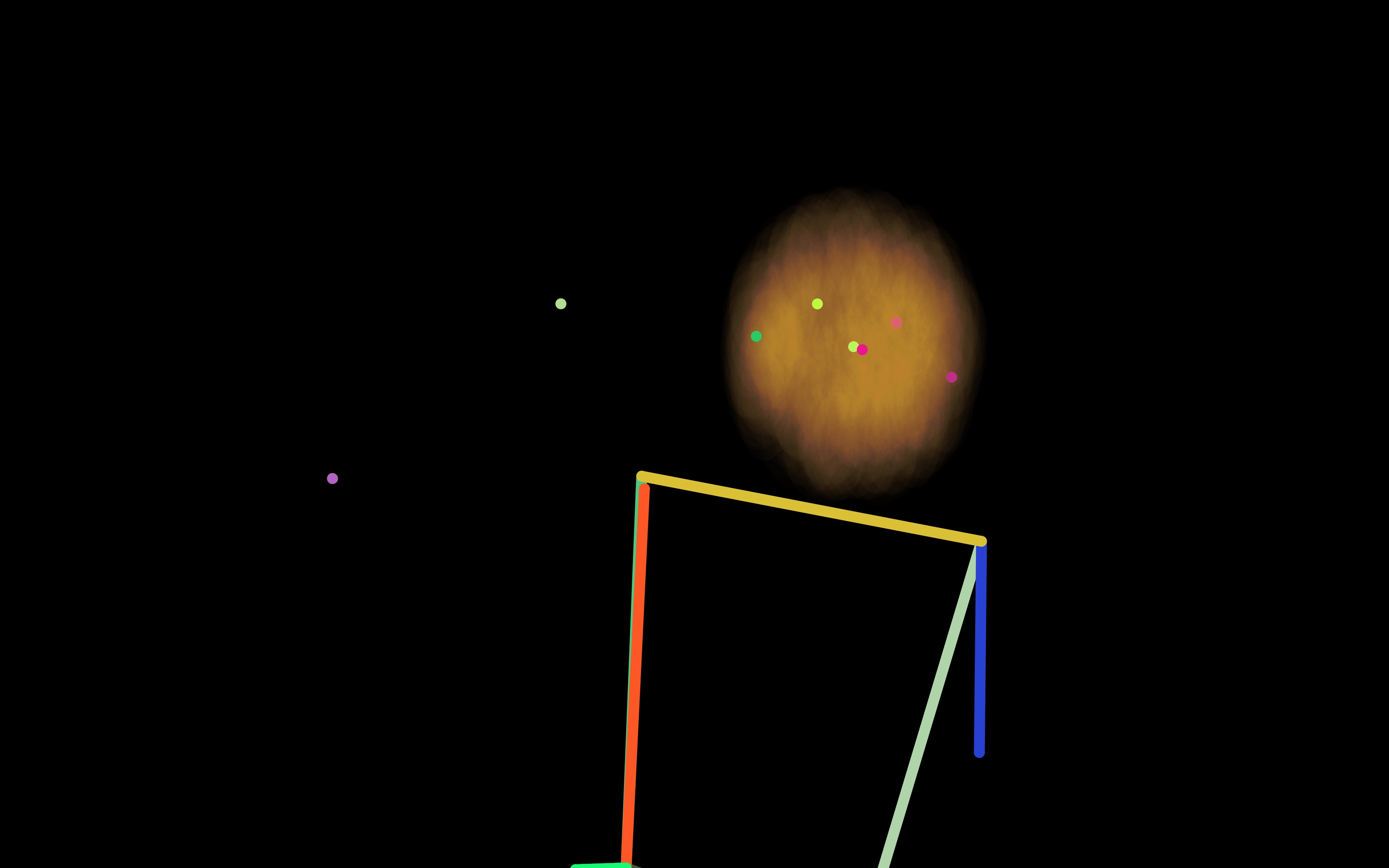

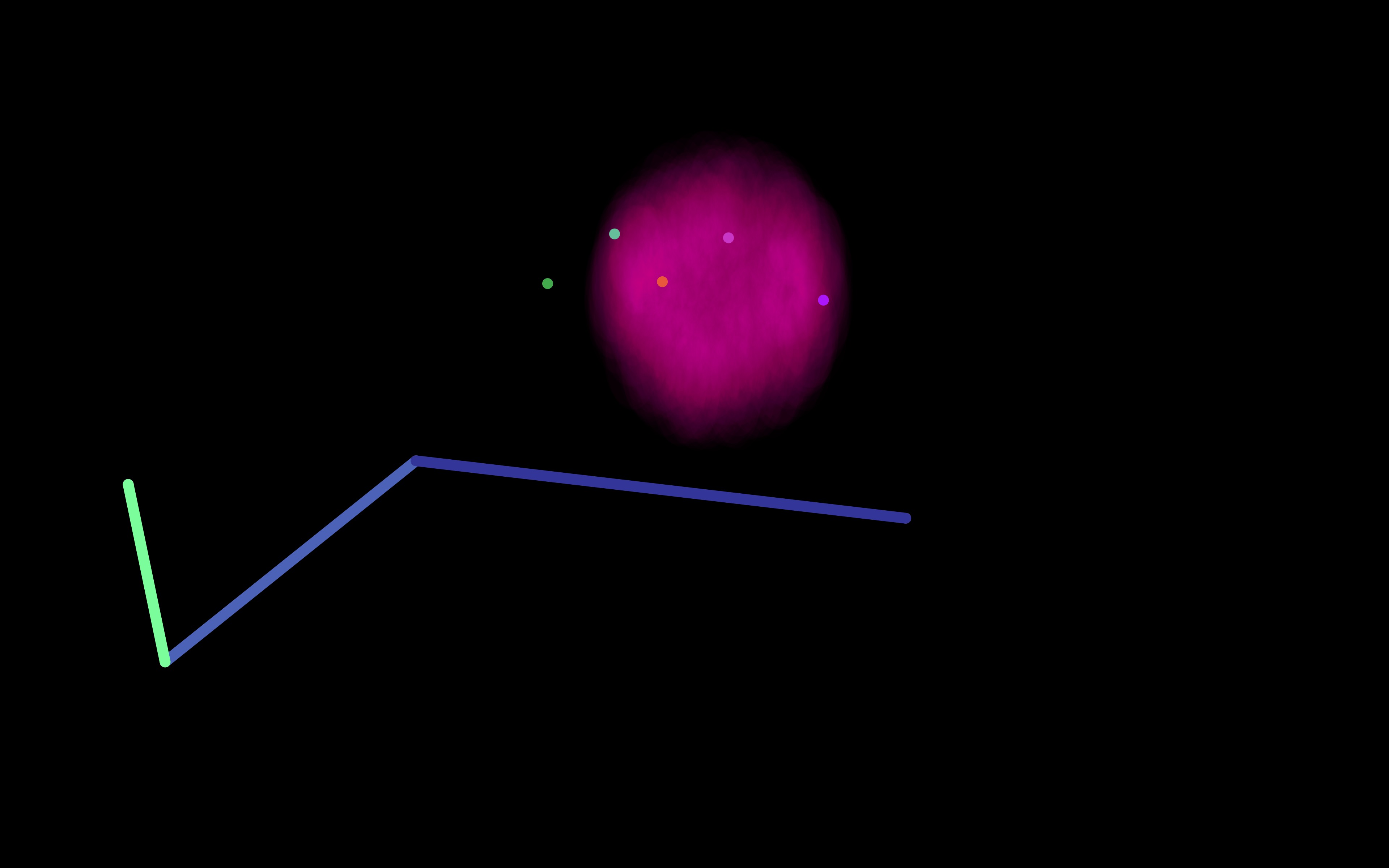

The interface is simple yet responsive. Participants activate the system through movement, becoming both performer and co‑author. The screen responds in real time, creating a closed feedback loop where body and algorithm shape each other.

TECHNICAL INFRASTRUCTURE

The browser‑native application relies on PoseNet via ml5.js to detect motion and translate it into visual output. No data is stored. This lightweight, accessible setup supports live rendering without backend dependencies, reinforcing user control.
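The “no data is stored” principle can also apply inside the render loop: motion only needs to live in memory long enough to be drawn. The sketch below assumes (the source does not say so) that the visuals use a fading trail of recent poses. It keeps a bounded buffer of the last few frames, evicts the oldest as new ones arrive, and never writes to disk, `localStorage`, or a server. The class name `EphemeralTrail` and its methods are hypothetical.

```typescript
// Hypothetical point type: a single 2D position on the canvas.
type Point = { x: number; y: number };

// Hypothetical in-memory trail of recent frames. Older frames are evicted
// once capacity is reached; nothing is persisted anywhere.
class EphemeralTrail {
  private frames: Point[][] = [];
  private readonly capacity: number;

  constructor(capacity: number) {
    if (!Number.isInteger(capacity) || capacity < 1) {
      throw new RangeError("capacity must be a positive integer");
    }
    this.capacity = capacity;
  }

  // Add the newest frame and drop the oldest once capacity is exceeded.
  push(frame: Point[]): void {
    this.frames.push(frame);
    if (this.frames.length > this.capacity) this.frames.shift();
  }

  // Frames oldest-first, with opacity rising toward the newest frame,
  // so earlier movement appears fainter when drawn.
  withOpacity(): { points: Point[]; alpha: number }[] {
    const n = this.frames.length;
    return this.frames.map((points, i) => ({ points, alpha: (i + 1) / n }));
  }

  // Discard everything, for example when the page is hidden or closed.
  clear(): void {
    this.frames = [];
  }

  get size(): number {
    return this.frames.length;
  }
}
```

With a capacity of 30 frames at roughly 30 frames per second, about one second of motion would remain visible at any moment, after which it is gone for good.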

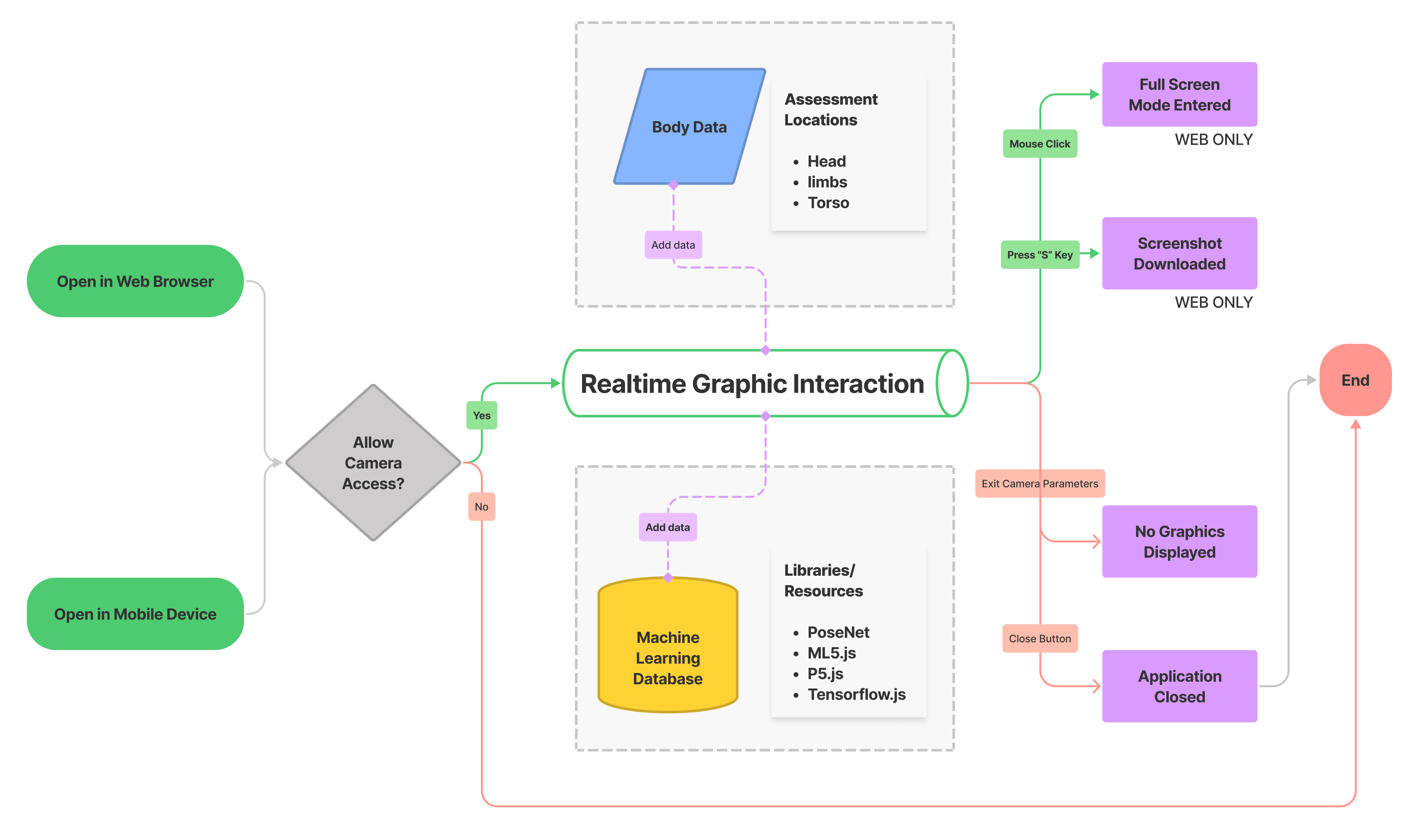

USER FLOW

1. Launch the application via web or mobile browser

2. Grant camera access

3. Move freely as body data is captured in real time

4. Visual feedback appears onscreen in response to motion

5. (Web only) Optionally enter fullscreen mode and save screenshots

TESTING ENVIRONMENT

Testing was conducted in person across various indoor and outdoor settings with stable internet access. Because the experience relies on real‑time visual feedback, it is not fully accessible to users with visual impairments. While participants with limited vision can engage with the movement component, they may not be able to perceive the visual outcomes directly.

VISUAL DISCLAIMER

This experience includes flashing imagery and rapidly changing colors. Viewer discretion is advised for individuals with photosensitive epilepsy or visual sensitivities.

AGENCY AS INTERDEPENDENCE

Agency, as conventionally defined, refers to the capacity to act or exert influence. In this work, agency is reframed as a distributed property shared between human and machine. Rather than treating the machine as a passive tool or the human as the sole originator, the project establishes mutual responsiveness: the user’s body generates movement, which the machine translates into visual language, which in turn re‑informs the user’s motion in a continuous feedback loop. Neither the human nor the machine can complete the artwork independently; their co‑presence is essential.

Actor‑Network Theory supports this reframing by positing that humans are not ontologically privileged over non‑human actors. In this system, the AI model’s role extends beyond execution; it participates in the interpretation and modulation of user behavior. This reciprocal shaping reflects ANT’s assertion that “actors gain strength only through their alliances” and that agency is always enacted through dynamic, interrelated networks.

AUTHORSHIP AS MUTUAL MEDIATION

The project also questions the assumption of singular authorship. While the human initiates and curates the experience, the machine interprets physical motion and transforms it into visual output. This relationship is neither one‑directional nor hierarchical; rather, it embodies mutual authorship. Each actant mediates the behavior of the other, and the final output is a product of their interaction rather than the expression of a single will.

ANT further holds that actants are transformed through encounters and mediation. In this collaboration, the human is shaped by the constraints and possibilities of the system, while the machine’s output is continually reshaped by the user’s movement. Authorship, then, is not a fixed status but a processual phenomenon: emergent, negotiated, and contingent on real‑time engagement.

ASSEMBLAGE AND TEMPORAL ONTOLOGY

At the core of this system is what ANT refers to as an assemblage: a temporary constellation of human and non‑human components whose interactions give rise to a particular event or effect. In HCI, the artwork exists only through the convergence of user, machine, code, and interface in a specific moment of live interaction. Once this performance ceases, so does the artwork.

This assemblage‑based model resists the idea of the work as an object with intrinsic meaning or authorship. Instead, it positions the artwork as an effect of relational activity, a momentary crystallization of intent, gesture, and computation. In doing so, the project advocates a new ethic in AI‑mediated design, grounded not in mastery or ownership but in reciprocity, responsibility, and entanglement.