Hanul-Computer Interaction (HCI)

Machine Learning + Visual Image Data + Human Pose Estimation

A Digital-Physical Experience with Movement-Based Interactivity Using Body Detection Technologies, a Discourse on the Collaborative Creative Practices of Designers and Machines

2021-22


Tool | p5.js, ml5.js

Code | JavaScript

Parsons Design & Technology Thesis Show 2022

I. CONCEPT

WHAT

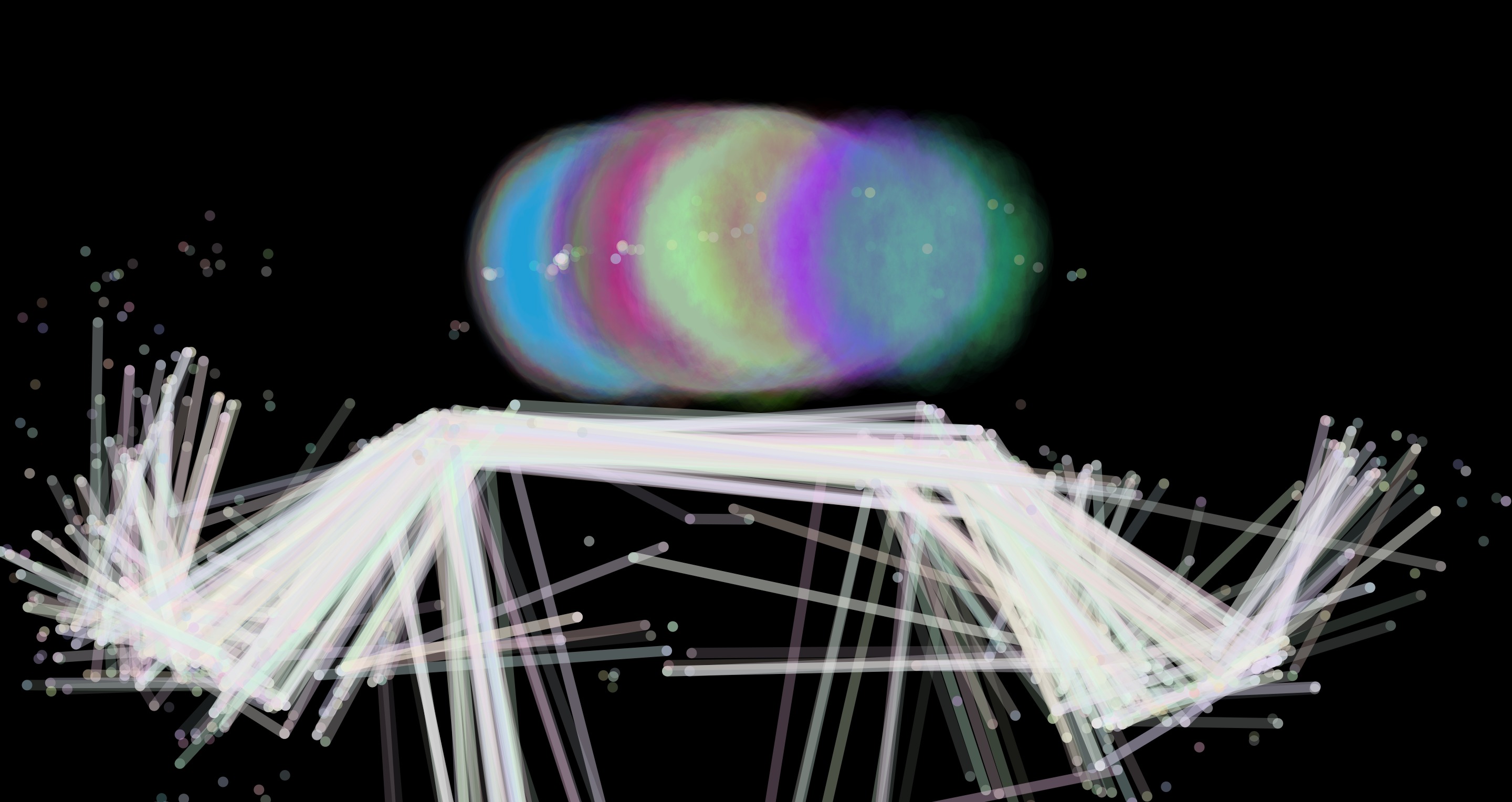

Hanul-Computer Interaction (HCI) is an interactive digital-physical experience that utilizes movement-based interactivity and machine learning for human pose detection. Through a web and mobile interface, users can draw real-time graphic reflections of their bodies directly onto the screen.

“Draw Yourself: A Digital Dance”

WHY

Focusing on process, this work explores the collaborative creativity between designers and machines. Through a series of machine learning projects, designer Hanul and a machine learning model named "Computer" developed an interdependent and symbiotic partnership. The real-time interaction between the user and the generated graphics reflects the dynamic interplay between designer and machine. This project aims to contribute to the ongoing discourse on the role of AI and machine learning in the artistic and design fields.

This project investigates the melancholic undercurrent of humanity's transformation into digital data. Fueled by the rapid development of facial recognition and body detection technologies, we are increasingly represented and categorized as collections of data points. This work serves as a critique of the surveillance, censorship, and ownership of this digitized human information. By actively rejecting data collection and individual profiling, it challenges the power dynamics inherent in these pervasive technologies.

HOW

HCI uses PoseNet, a human pose detection model available through the ml5.js library. Powered by a pre-trained TensorFlow.js model, PoseNet tracks users' body movements in real time without capturing identifiable details. The intentionally blurred graphic style further protects participant anonymity. This abstraction resists the reduction of users to data points, a practice commonly associated with facial recognition and body detection technologies. By interacting with the interface, users contribute to the critical discourse surrounding data ownership and individual privacy.
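As a rough illustration of this abstraction, the sketch below reduces PoseNet results to bare coordinates and draws them as soft, translucent dots. It is not the project's actual code: the result shape assumes the ml5.js 0.x PoseNet API, and the names `extractPoints`, `drawPoints`, and `MIN_SCORE`, along with the threshold and dot size, are hypothetical.

```javascript
// Minimal sketch, assuming the ml5.js 0.x PoseNet result shape:
// [{ pose: { keypoints: [{ part, score, position: { x, y } }] } }, ...]

const MIN_SCORE = 0.2; // hypothetical confidence threshold

// Reduce PoseNet results to bare coordinates.
// Part names, scores, and camera pixels are all left behind.
function extractPoints(results, minScore = MIN_SCORE) {
  const points = [];
  for (const result of results) {
    for (const kp of result.pose.keypoints) {
      if (kp.score >= minScore) {
        points.push({ x: kp.position.x, y: kp.position.y });
      }
    }
  }
  return points;
}

// p5.js drawing helper: translucent dots instead of the camera image.
// Call it from draw() inside a p5.js sketch.
function drawPoints(points) {
  noStroke();
  fill(255, 255, 255, 40); // low alpha lets overlapping dots build a soft, blurred look
  for (const p of points) {
    circle(p.x, p.y, 48);
  }
}
```

Because only `x` and `y` survive the filtering step, nothing drawn on screen can be traced back to a face or a camera frame.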

“Pixels & Privacy: Dance Your Data Away”

II. PROCESS

Approach

This project prioritizes the journey of creation over the final product. Like an artist studying their subject through a series of sketches, this work delves into the process of exploring human-machine collaboration. Here, I act not only as the creator, but more importantly as the curator, shaping the user experience and guiding the exploration.

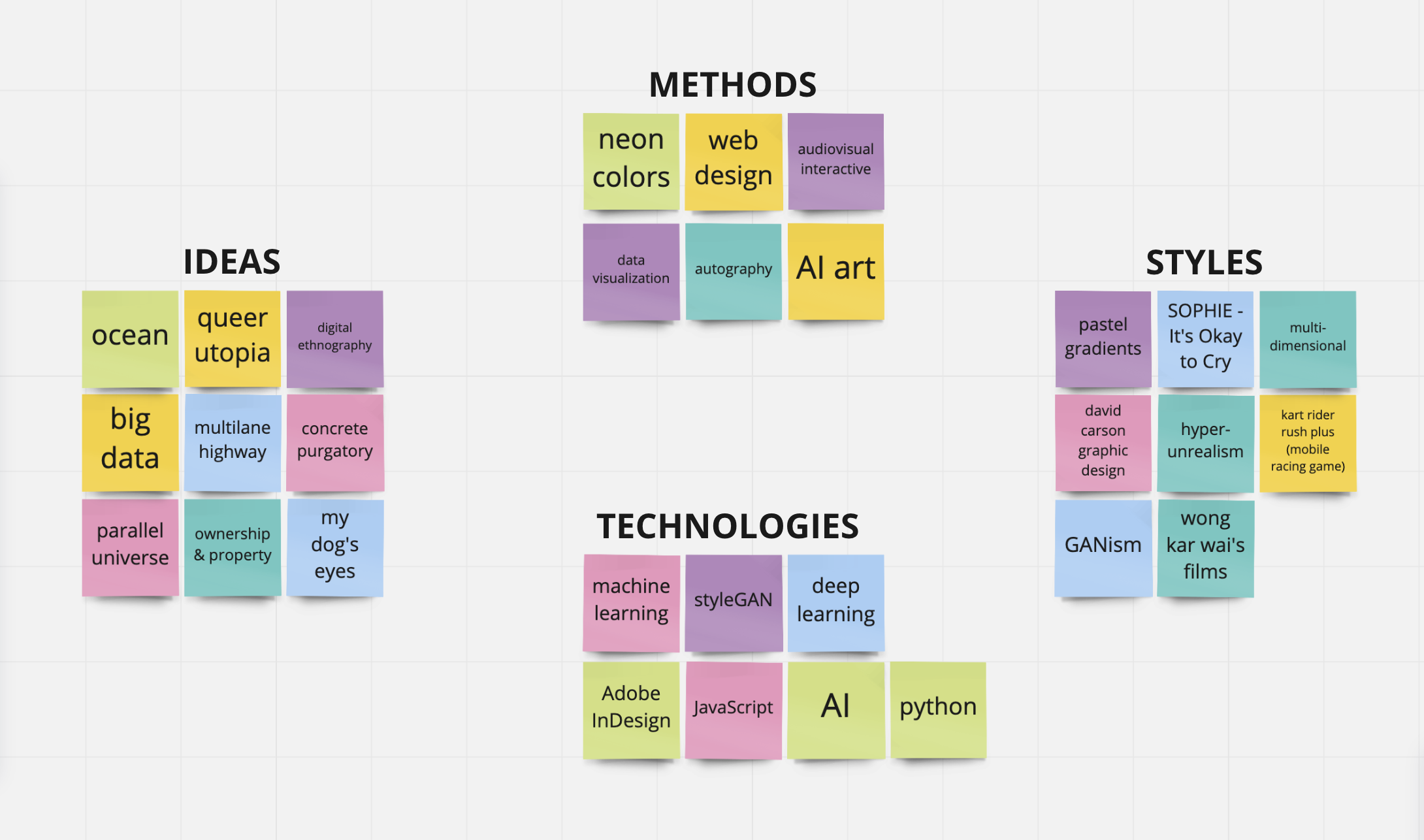

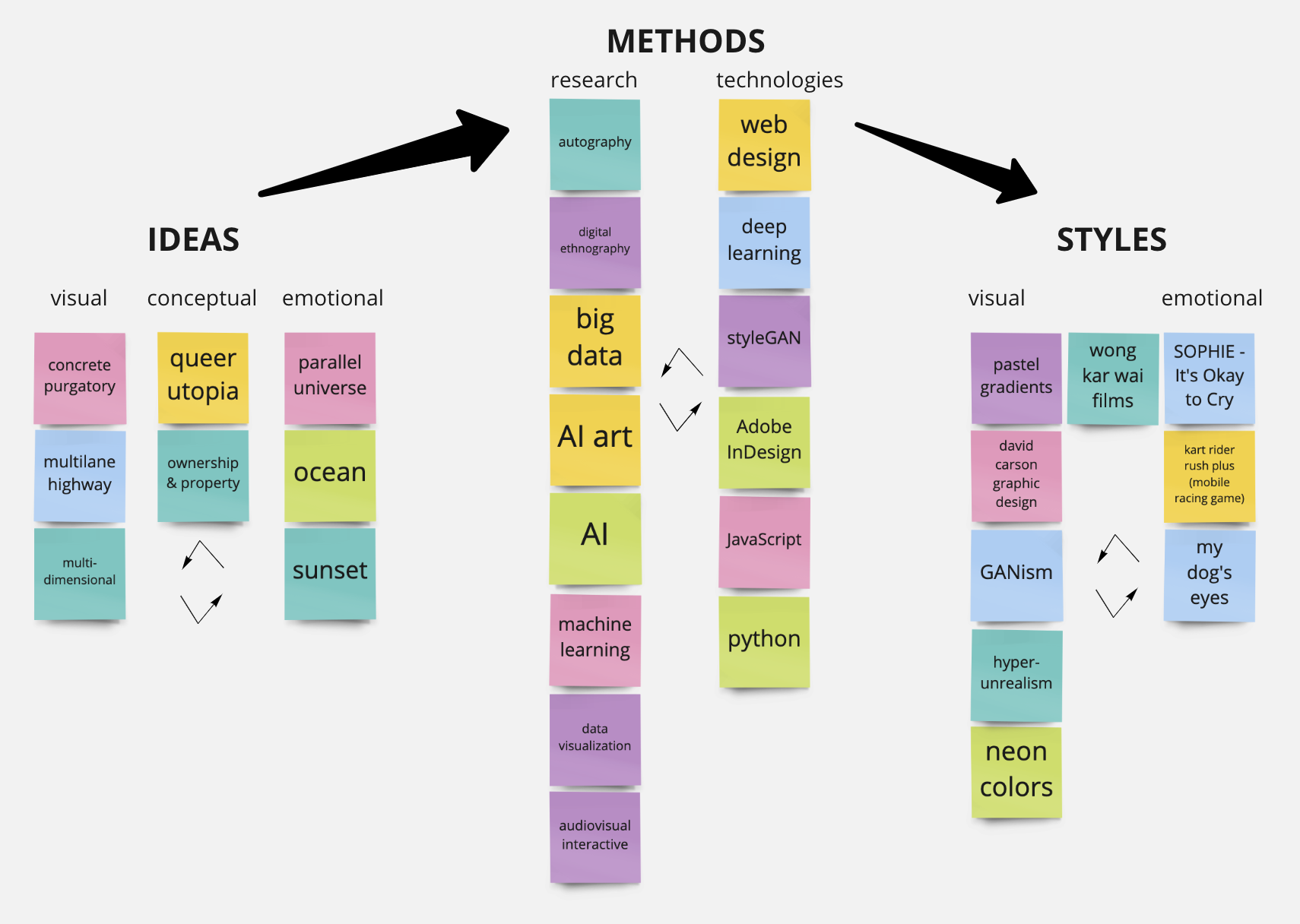

Brainstorm

Research

This project employs a combined autoethnographic and digital-ethnographic approach to explore the collaborative working experience with my computer through the lens of Actor-Network Theory (ANT). The final product serves as a visual and computational expression of this investigation and its resulting contemplations. My research focuses particularly on the questions of agency and authorship that emerge from my intimate relationship with the machine, blurring the traditional boundaries between human and non-human actors.

III. PROJECTS

1. Family Photo-Painting Album

◍ Platform: RunwayML (Style Transfer)

◍ Input: Selected original photos of myself & my dog

◍ Style: Famous paintings and styles: Cubism, Van Gogh, Hokusai, Kandinsky, Monet, Picasso, Wu Guanzhong

This project delves into the emotional potential of digital creation through an exploratory practice. In a world saturated with computer-generated imagery, it seeks to reframe Generative Adversarial Networks (GANs) by imbuing them with a sense of sentimentality. Rather than simply replicating existing styles, this creative production leverages the intricate and intimate visual capabilities of AI and machine learning to design, iterate, and refine a unique set of personal visual assets. This process aims to spark a deeper appreciation and a more imaginative engagement with the potential of AI-generated art.

2. In Pixels

◍ Technology: Vector Quantized Generative Adversarial Network and Contrastive Language–Image Pre-training (VQGAN+CLIP)

◍ Platform: NightCafe (Text to Image)

◍ Input: Sappho. "Poems of Sappho." Translated by Julia Dubnoff. University of Houston.

IV. PRODUCT

Design

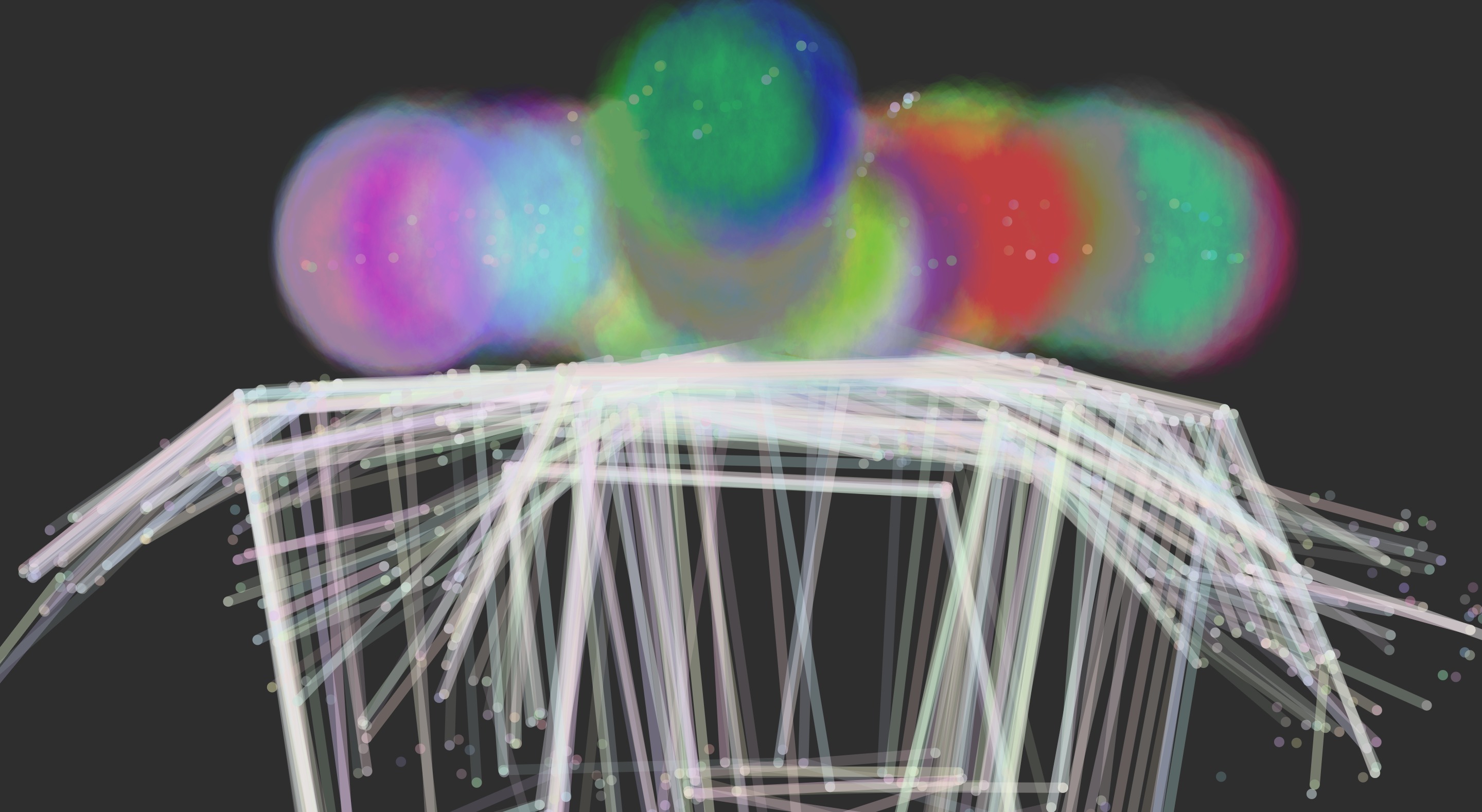

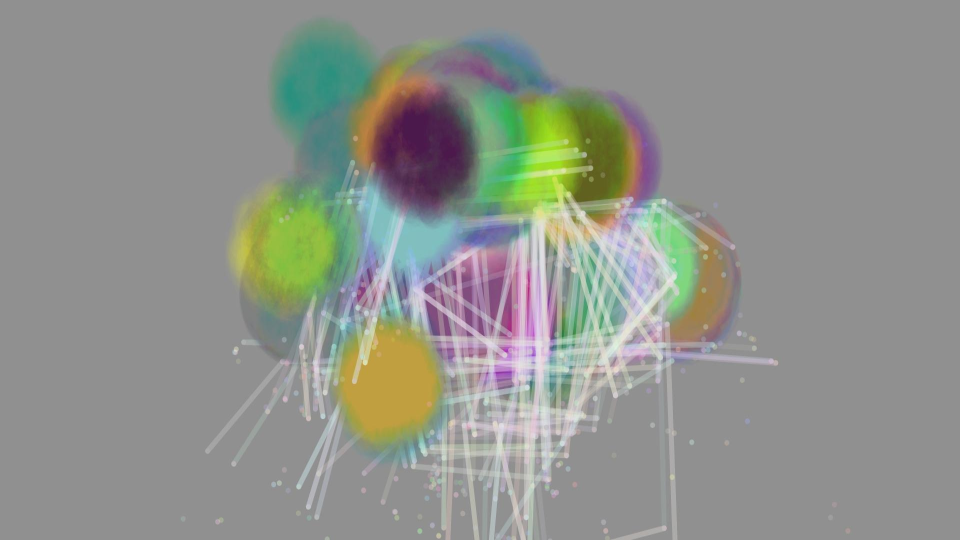

The interactive interface serves as a reflection of the designer's collaborative experience with the machine. By engaging with the product, users become embodied participants in this collaborative process. Through their physical motions, they directly co-create with the machine and are invited to reflect on this interrelationship. The design deliberately prompts questions regarding materiality and virtuality: How do physical bodies interact with software interfaces, and how are these material movements translated and manifested in the virtual realm?

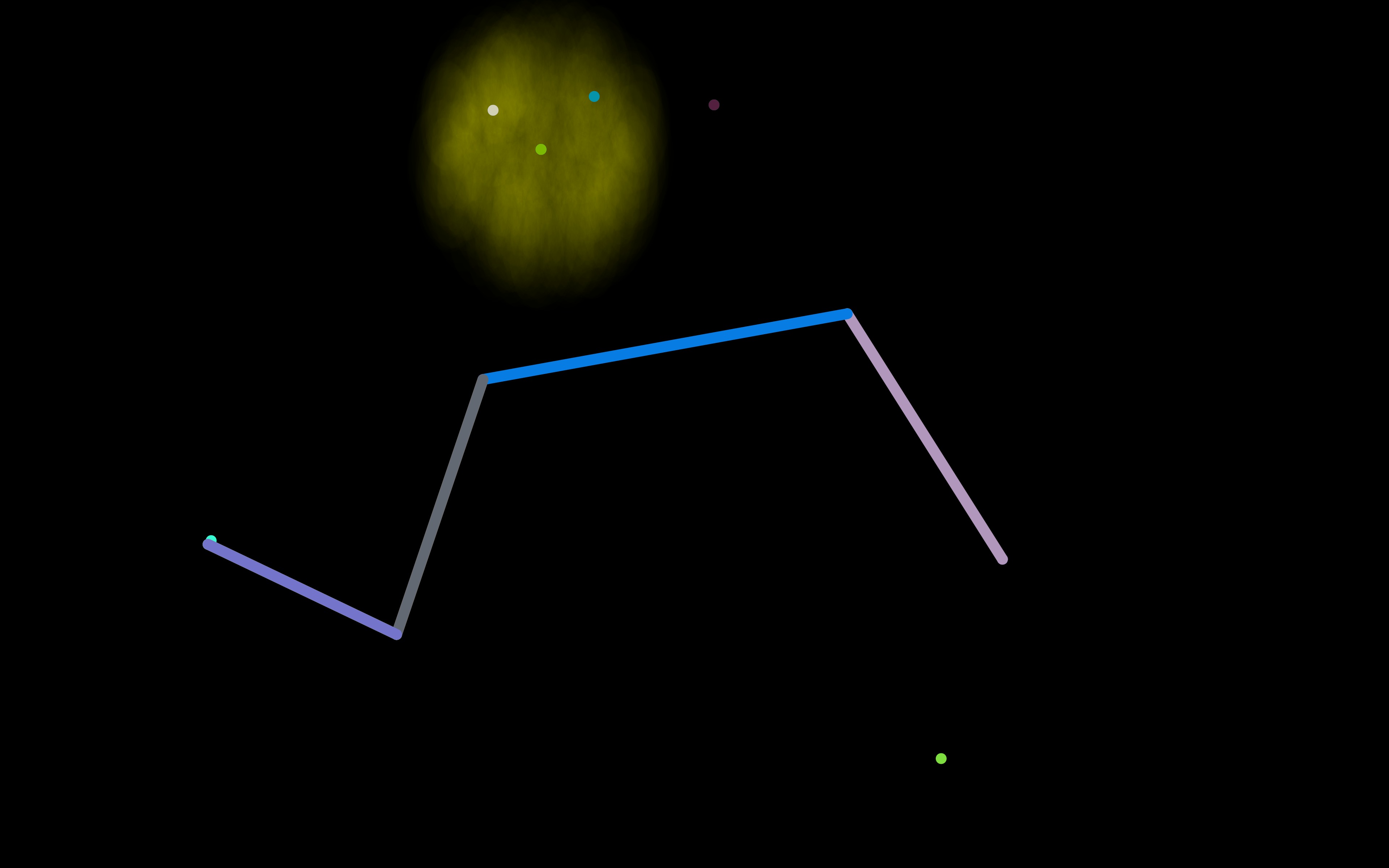

Prototype I

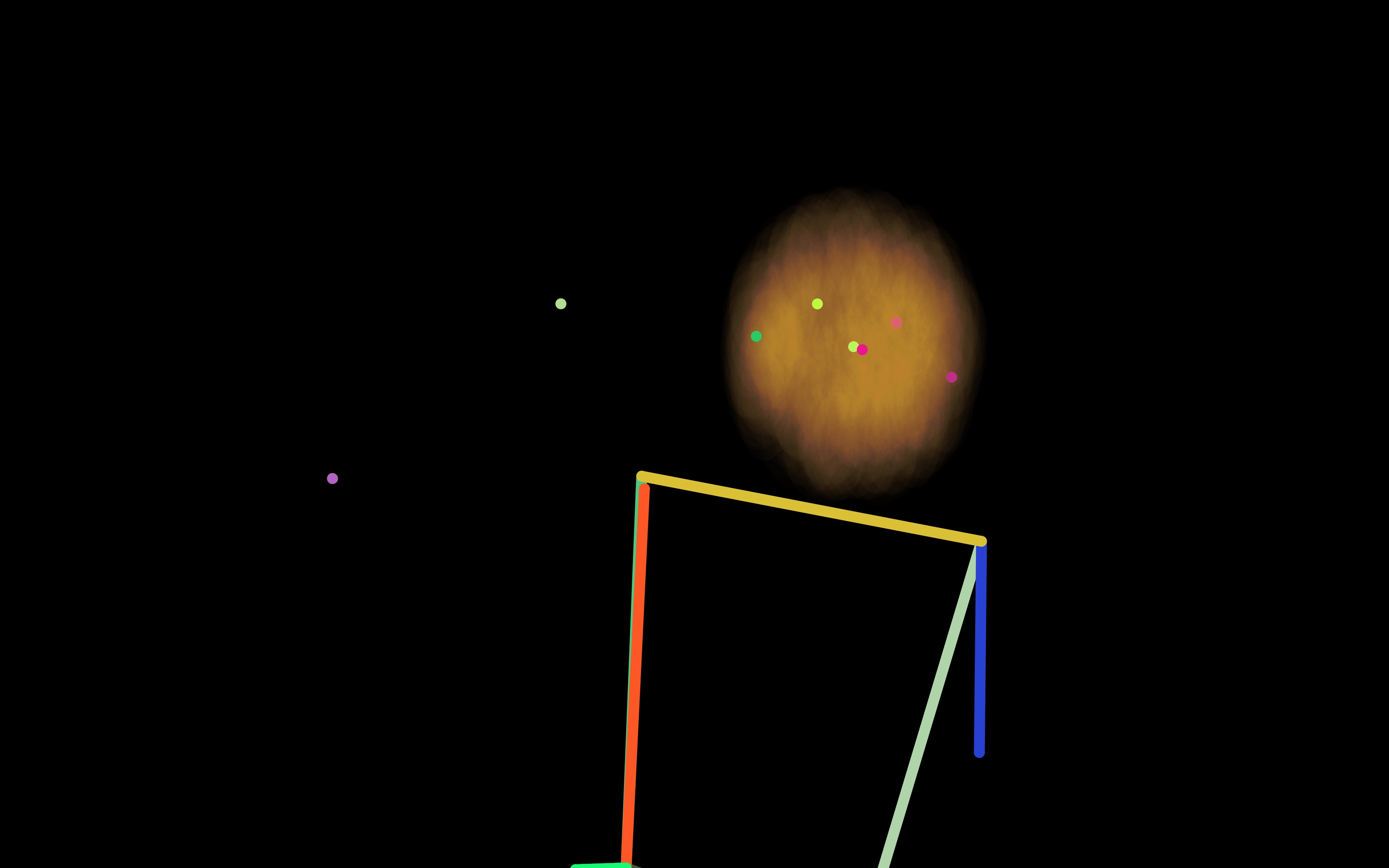

Prototype II

Technology

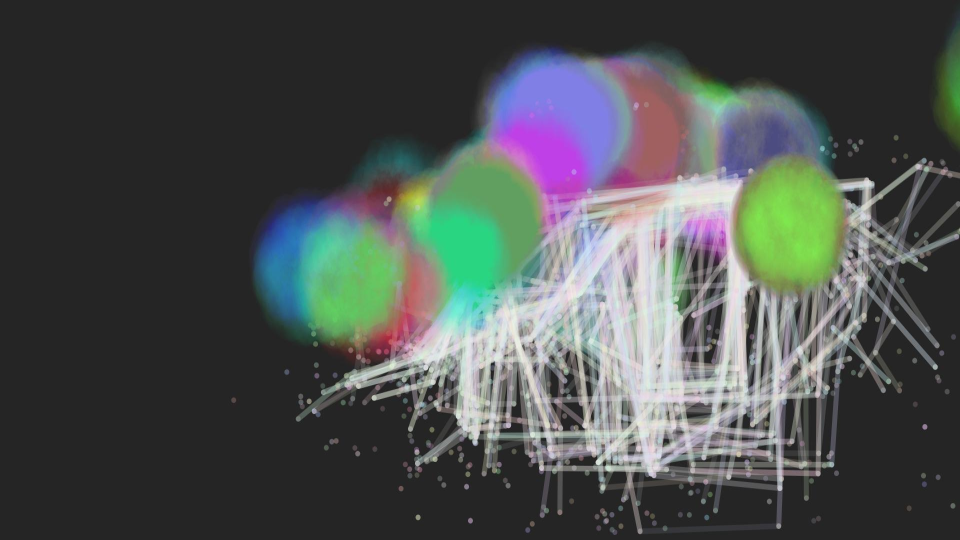

The project uses PoseNet, a machine learning model available through the ml5.js library, for real-time human pose estimation directly in the web browser. ml5.js is an open-source library developed collaboratively by engineers, educators, and artists; it is built on TensorFlow.js and designed to work alongside p5.js for creative exploration. Once loaded in the browser, the product uses the user's webcam to capture visual data and estimates their movements in real time. The open-source nature of ml5.js aligns with the project's goal of promoting accessible and creative exploration of machine learning technologies.
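The wiring between the webcam, PoseNet, and the canvas might look roughly like the following. This is a minimal sketch rather than the project's source: it assumes p5.js and ml5.js 0.x (where `ml5.poseNet` is available) are loaded on the page, and the canvas size, score threshold, and the `onPose` handler name are illustrative choices.

```javascript
let video;      // p5.js webcam capture element
let poseNet;    // ml5.js PoseNet instance
let poses = []; // most recent detection results

// Store the latest results; ml5.js calls this whenever a new estimate arrives.
function onPose(results) {
  poses = results;
  return poses.length; // number of people detected in this estimate
}

// p5.js calls setup() once when the page loads.
function setup() {
  createCanvas(640, 480);
  video = createCapture(VIDEO); // triggers the browser's camera permission prompt
  video.size(width, height);
  video.hide();                 // keep the raw camera element off the page
  poseNet = ml5.poseNet(video, () => console.log("PoseNet ready"));
  poseNet.on("pose", onPose);
}

// p5.js calls draw() every frame.
function draw() {
  background(0);
  for (const { pose } of poses) {
    for (const kp of pose.keypoints) {
      if (kp.score > 0.2) circle(kp.position.x, kp.position.y, 12);
    }
  }
}
```

Keeping `onPose` separate from `draw()` means detection and rendering run at their own pace: the sketch always draws the most recent estimate, even if the model updates less often than the screen.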

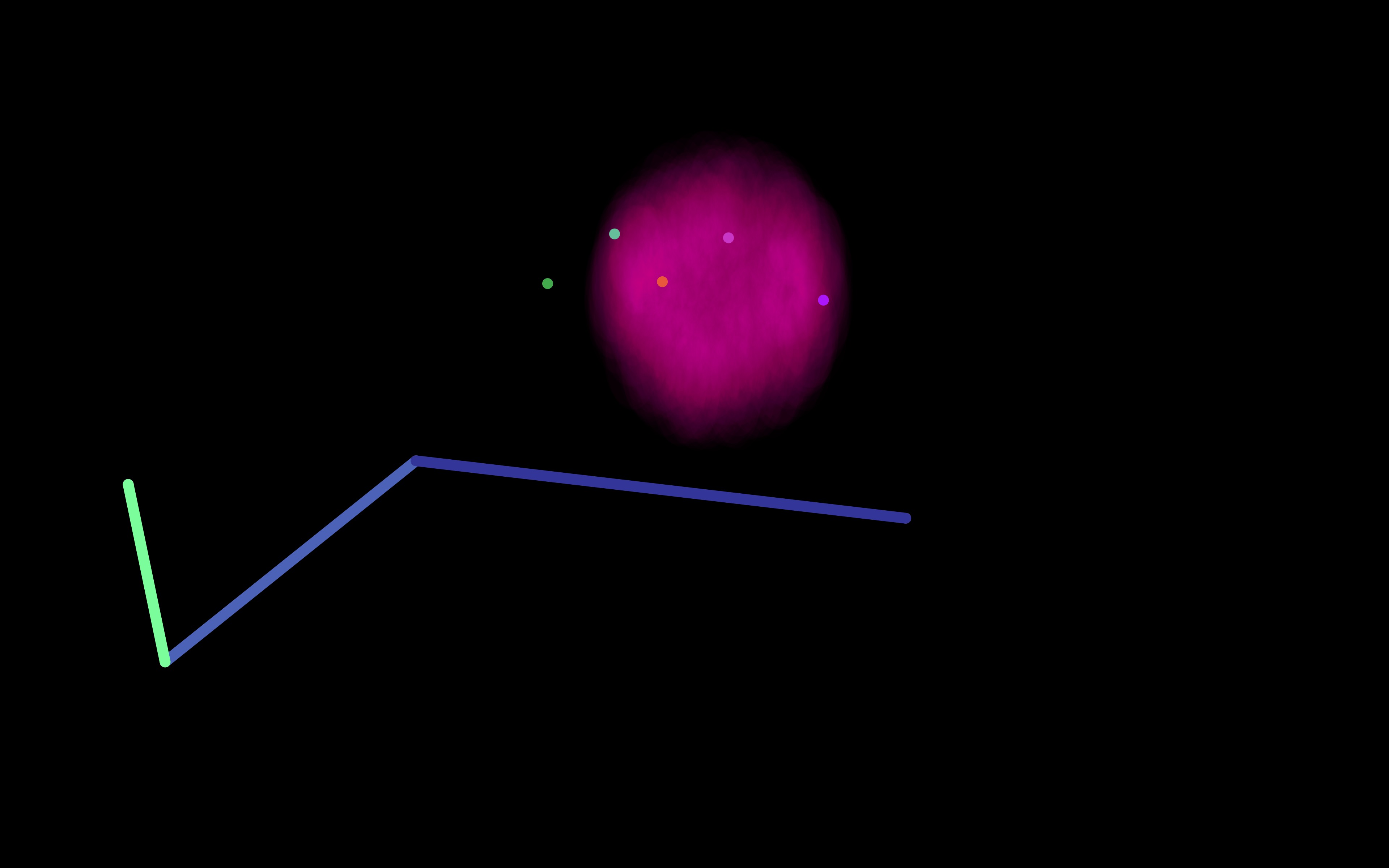

Web Browser

Mobile Browser

V. USABILITY

User Scenario

Interaction with the project is facilitated entirely through the user's body movements. Users can choose to stand or sit, tailoring their experience to their preferences, abilities, or mood. Facing a chosen electronic device, such as a desktop computer or mobile phone, users engage with the sketch by moving their bodies. These movements are captured by the system and translated into real-time digital graphics that reflect the user's physical form on the screen.
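Because desktop and mobile screens rarely match the camera's resolution, detected positions usually need to be rescaled before they are drawn. The helper below is one hypothetical way to do that, with an optional horizontal flip so the graphics behave like a mirror. The function name `mapToCanvas` and the mirroring default are assumptions, not details taken from the project.

```javascript
// Map a keypoint from camera-frame coordinates to canvas coordinates.
// videoSize and canvasSize are { width, height } objects.
// When mirror is true, the point is flipped horizontally first,
// so raising the right hand moves graphics on the right side of the screen.
function mapToCanvas(point, videoSize, canvasSize, mirror = true) {
  const scaleX = canvasSize.width / videoSize.width;
  const scaleY = canvasSize.height / videoSize.height;
  const x = mirror ? videoSize.width - point.x : point.x;
  return { x: x * scaleX, y: point.y * scaleY };
}

// Example: a 640x480 camera frame drawn on a 1280x960 canvas.
const mapped = mapToCanvas(
  { x: 160, y: 120 },
  { width: 640, height: 480 },
  { width: 1280, height: 960 }
);
console.log(mapped); // { x: 960, y: 240 }
```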

Testing Environment

User testing will be conducted in person at an indoor or outdoor location with a reliable internet connection. Because the project relies on visual feedback on the screen, it is currently not fully accessible to participants with visual impairments: they can take part in the movement and have their body motion processed, but they cannot directly experience the resulting digital graphics.

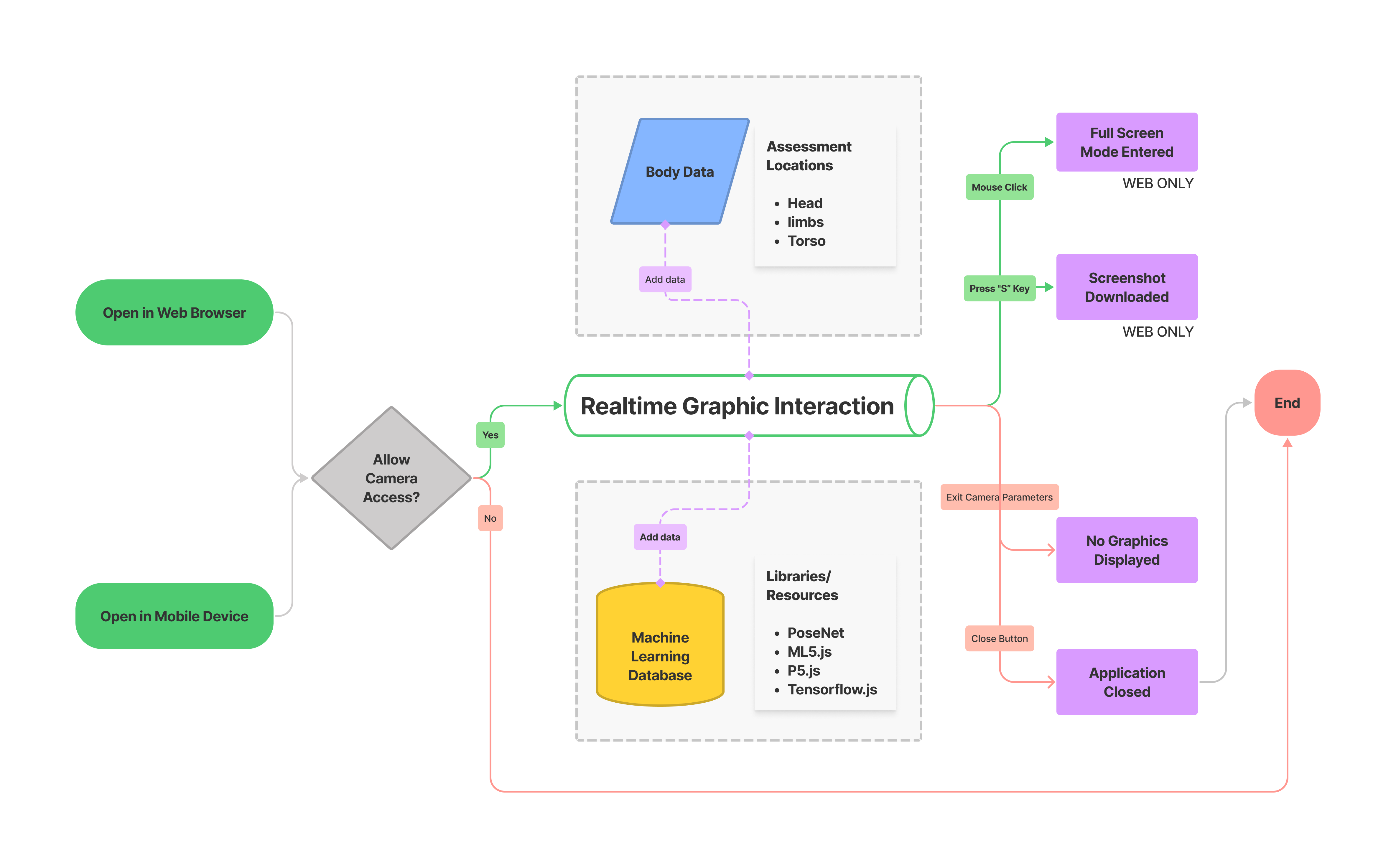

Flowchart

Touchpoints

◍ The user opens the application in a web browser or on a mobile device

◍ The user is asked to approve access to the device's camera

◍ The user's body data are captured by the camera

◍ The user's real-time movements are illustrated on the screen

◍ The user views the illustrations of their movement

◍ The user enters full-screen mode (web only)

◍ The user saves a screenshot of one or more moments of choice (web only)
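The last two touchpoints could be handled with p5.js's built-in `fullscreen()` and `saveCanvas()` functions, as sketched below. The key bindings ("f" and "s"), the file-name pattern, and the helper names `actionForKey` and `screenshotName` are hypothetical; the project's actual controls are not documented here.

```javascript
// Hypothetical key bindings: "f" toggles full screen, "s" saves a screenshot.
function actionForKey(k) {
  switch (String(k).toLowerCase()) {
    case "f": return "fullscreen";
    case "s": return "screenshot";
    default: return null;
  }
}

// Build a timestamped file name so saved moments do not overwrite each other.
function screenshotName(date = new Date()) {
  return "hci-" + date.toISOString().replace(/[:.]/g, "-");
}

// p5.js calls keyPressed() on every key press; `key` is a p5.js global.
function keyPressed() {
  const action = actionForKey(key);
  if (action === "fullscreen") {
    fullscreen(!fullscreen()); // p5.js getter/setter for the full-screen state
  } else if (action === "screenshot") {
    saveCanvas(screenshotName(), "png");
  }
}
```

Separating the key-to-action mapping from the p5.js calls keeps the bindings easy to change, for example to on-screen buttons if these features were later extended to mobile.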

Disclaimer

Visual Warning: This work utilizes flashing lights and rapidly changing colors. Viewer discretion is advised for those with photosensitive epilepsy or visual sensitivities.

VI. REFLECTION

Analysis

My analysis hinges on the interconnected concepts of agency and authorship. Agency, as defined by Merriam-Webster, refers to the "capacity, condition, or state of acting or of exerting power." In the context of this project, it compels us to consider the degree of control and decision-making exercised by both myself and the machine collaborator. Authorship, on the other hand, focuses on the "act of writing, creating, or causing." Here, I examine the attribution of creative responsibility for the final product.

This project blurs the lines between traditional notions of singular human agency and authorship. Through our collaborative process, the machine and I co-create the artwork. I act as a curator, shaping the user experience and guiding the exploration, while the machine contributes its unique capabilities for real-time pose estimation and visual generation. By analyzing user interactions and the emergent qualities of the artwork, I aim to illuminate the complex interplay of human and machine agency in this creative partnership. Ultimately, this exploration challenges traditional models of authorship, suggesting a more nuanced understanding of how authorship can be distributed and shared in an AI-driven creative environment.

"Agency"

Through the lens of Actor-Network Theory (ANT), my project highlights the concept of shared agency in creative production. ANT posits that "humans are not privileged among countless actors/actants, all of which are ontologically symmetrical." In this project, both the human and the machine collaborator contribute equally to the creative process. Their immediate, simultaneous, and irreplaceable presence is crucial for the artwork's existence. Neither human nor machine can sustain the sketch independently – each relies on the other's capabilities to generate the final product.

This shared agency is further emphasized by ANT's notion that "actors/actants gain strength only through their alliances." The human's agency encompasses not only creating physical movements but also responding to the visual feedback on the screen, adapting their actions based on what they see. Similarly, the machine's agency extends beyond generating digital drawings; it continuously registers the user's changing motions, influencing the artwork's evolution. This collaborative and interdependent relationship enhances the sense of agency for both human and machine, ultimately enabling the production of real-time, interactive sketches.

"Authorship"

Authorship in this project extends beyond traditional notions of singular creators. Drawing from Actor-Network Theory (ANT), I propose a model of "mutual authorship" between human and machine. ANT suggests that "actors/actants transform to a different state via mediation when they encounter each other." In this collaboration, the human creates physical motions, while the machine translates these movements into real-time drawings. However, this is not a simple exchange. The human's actions are constantly informed by the visual feedback on the screen, and the machine's drawings are contingent upon the user's ongoing movements. This interdependence blurs the lines of authorship, resulting in a singular artwork co-created by human and machine.

ANT further emphasizes the concept of "assemblage," stating that "there is no essence within or beyond any process of assemblage. Actors are concrete; there is no 'potential' other than their actions in the moment. Entities are nothing more than an effect of assemblage." In the context of this project, the only active "actants" are the human user and the operative computer system. External factors, such as other devices or waiting participants, are not part of the immediate assemblage that produces the artwork.